Final Grasping Results and Integration with DSR

DATE: August 7, 2020

In this post, I show the final object grasping results obtained with the entire pipeline. I also share our strategy for integrating the system with the DSR graph, along with the new architecture.

Final Grasping Results (Second Demo)

In the previous post, I showed the complete system integration and the results of path planning using the estimated poses. However, the system also had to be tested on object grasping and manipulation. Consequently, I extended the embedded Lua scripts with gripper control functions and then updated the viriatoGraspingPyrep component to call these scripts through the PyRep API. This gives a complete grasping pipeline in the viriatoGraspingPyrep component, which works as follows:

- The viriatoGraspingPyrep component opens the arm gripper through the embedded Lua scripts.
- The viriatoGraspingPyrep component captures the RGBD signal from the shoulder camera and passes it to the pose estimation components.
- The pose estimation components estimate the objects' poses and send them back to the viriatoGraspingPyrep component.
- The viriatoGraspingPyrep component then creates a dummy at the estimated pose and calls the embedded Lua scripts to perform path planning.
- The arm gripper is then closed, and the object is grasped.
- From there, we can create other dummies for the arm to manipulate the object or move it to another position.
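The steps above can be sketched as a small orchestration function. This is a minimal sketch, not the actual viriatoGraspingPyrep code: the `Simulator` and `PoseEstimator` interfaces and all of their method names (`open_gripper`, `capture_rgbd`, `create_dummy`, `plan_and_move_to`, `estimate`) are hypothetical stand-ins for the embedded Lua script calls made through PyRep and for the pose estimation components. The recording stand-ins at the bottom only exercise the control flow without a simulator.

```python
from typing import Dict, List, Protocol, Tuple

# A 6-DoF pose: position (x, y, z) followed by a quaternion (qx, qy, qz, qw).
Pose = Tuple[float, float, float, float, float, float, float]


class Simulator(Protocol):
    """Hypothetical wrapper around the embedded Lua scripts called via PyRep."""

    def open_gripper(self) -> None: ...
    def close_gripper(self) -> None: ...
    def capture_rgbd(self) -> Tuple[object, object]: ...
    def create_dummy(self, pose: Pose) -> str: ...
    def plan_and_move_to(self, dummy: str) -> bool: ...


class PoseEstimator(Protocol):
    """Hypothetical client for the pose estimation components."""

    def estimate(self, rgb: object, depth: object) -> Dict[str, Pose]: ...


def grasp_object(sim: Simulator, estimator: PoseEstimator, target: str) -> bool:
    """Run the grasping steps in order; return True if the object was grasped."""
    sim.open_gripper()                       # open the gripper first
    rgb, depth = sim.capture_rgbd()          # RGBD from the shoulder camera
    poses = estimator.estimate(rgb, depth)   # DNN pose estimation
    if target not in poses:
        return False                         # target object was not detected
    dummy = sim.create_dummy(poses[target])  # dummy placed at the estimated pose
    if not sim.plan_and_move_to(dummy):      # path planning to the dummy
        return False
    sim.close_gripper()                      # close the gripper to grasp
    return True


# Stand-ins used only to exercise the control flow without a simulator.
class RecordingSim:
    def __init__(self) -> None:
        self.calls: List[str] = []

    def open_gripper(self) -> None:
        self.calls.append("open")

    def close_gripper(self) -> None:
        self.calls.append("close")

    def capture_rgbd(self) -> Tuple[object, object]:
        self.calls.append("capture")
        return "rgb-frame", "depth-frame"

    def create_dummy(self, pose: Pose) -> str:
        self.calls.append("dummy")
        return "grasp_target"

    def plan_and_move_to(self, dummy: str) -> bool:
        self.calls.append("plan")
        return True


class FixedEstimator:
    def estimate(self, rgb: object, depth: object) -> Dict[str, Pose]:
        return {"can": (0.1, 0.2, 0.3, 0.0, 0.0, 0.0, 1.0)}


sim = RecordingSim()
print(grasp_object(sim, FixedEstimator(), "can"), sim.calls)
# prints: True ['open', 'capture', 'dummy', 'plan', 'close']
```

Keeping the simulator behind an interface like this makes the grasping order testable on its own, while the real calls stay inside the Lua scripts.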

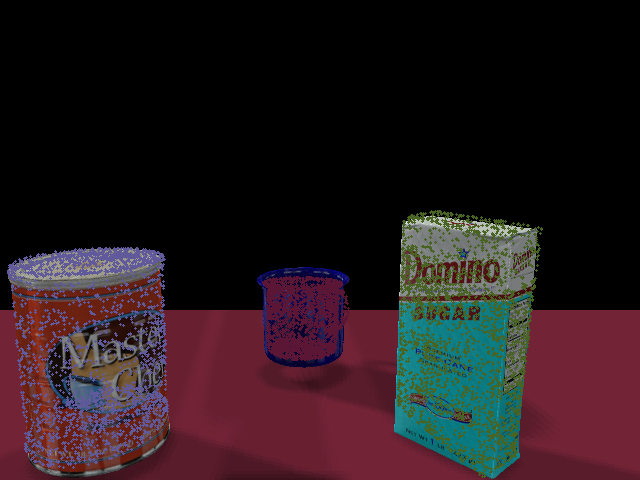

Following this procedure, I integrated the full grasping pipeline and created a complete grasping demo using DNN-estimated poses. For pose estimation, I used an ensemble of the poses estimated from RGB input and from RGBD input. I also placed multiple objects in the scene to make both pose estimation and grasping more challenging.
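The post does not say how the RGB and RGBD estimates are combined, so the following is only one plausible way to fuse two pose estimates of the same object, under that assumption: a weighted average of the positions, plus a quaternion average that first aligns signs (since q and -q encode the same rotation) and then renormalizes. The function name `fuse_poses` and the weighting scheme are hypothetical. This quaternion average is an approximation that works well only when the estimates are close to each other.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w)


def _normalize(q: Sequence[float]) -> Quat:
    n = math.sqrt(sum(c * c for c in q))
    return (q[0] / n, q[1] / n, q[2] / n, q[3] / n)


def fuse_poses(positions: Sequence[Vec3], quats: Sequence[Quat],
               weights: Sequence[float]) -> Tuple[Vec3, Quat]:
    """Hypothetical ensemble of several pose estimates of one object."""
    total = sum(weights)
    # Weighted mean of the positions, one axis at a time.
    pos = tuple(
        sum(w * p[i] for w, p in zip(weights, positions)) / total
        for i in range(3)
    )
    # Flip each quaternion into the same hemisphere as the first one,
    # accumulate the weighted sum, then renormalize to unit length.
    ref = quats[0]
    acc: List[float] = [0.0, 0.0, 0.0, 0.0]
    for w, q in zip(weights, quats):
        sign = 1.0 if sum(a * b for a, b in zip(ref, q)) >= 0.0 else -1.0
        for i in range(4):
            acc[i] += w * sign * q[i]
    return pos, _normalize(acc)


# RGB and RGBD estimates of the same object; the second quaternion has a
# flipped sign but represents the same (identity) rotation.
pos, quat = fuse_poses(
    [(0.50, 0.10, 0.80), (0.52, 0.10, 0.78)],
    [(0.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0, -1.0)],
    [1.0, 1.0],
)
print(pos, quat)
```

Equal weights treat both estimators as equally reliable; per-estimator confidences could be used as weights instead.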

DSR Integration Strategy

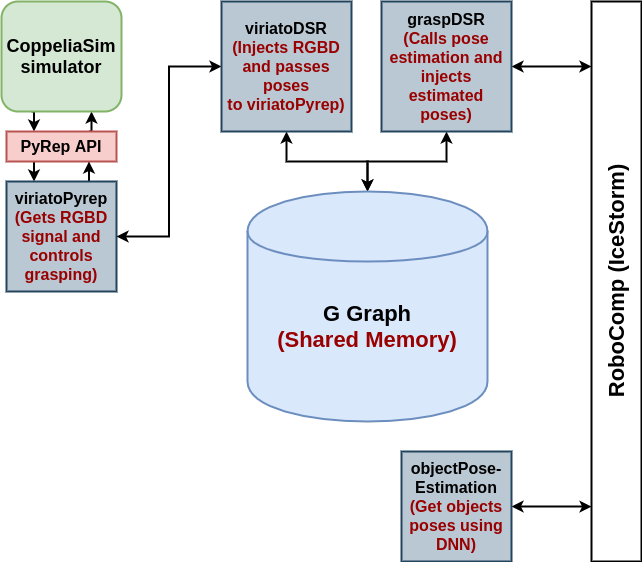

As shown in the figure, the component workflow is as follows:

- The viriatoPyrep component streams the RGBD signal from the CoppeliaSim simulator using the PyRep API and publishes it to the shared graph through the viriatoDSR component.
- The graspDSR component reads the RGBD signal from the shared graph and passes it to the objectPoseEstimation component.
- The objectPoseEstimation component then performs pose estimation using DNNs and returns the estimated poses.
- The graspDSR component injects the estimated poses into the shared graph and progressively plans dummy targets for the arm to reach the target object.
- The viriatoDSR component then reads the dummy target poses from the shared graph and passes them to the viriatoPyrep component.
- Finally, the viriatoPyrep component uses the poses generated by graspDSR to progressively plan a successful grasp on the object.
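The data flow above can be illustrated with a toy in-memory stand-in for the shared graph. This is only a sketch: it does not use the real DSR API, and the `SharedGraph` class, the node and attribute names (`camera`, `gripper`, `target_object`, `dummy_targets`), the position-only targets, and the straight-line approach in `plan_dummy_targets` are all hypothetical choices for illustration, not the actual graspDSR planning logic.

```python
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


class SharedGraph:
    """Toy stand-in for the DSR shared graph: node name -> attributes."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Dict[str, object]] = {}

    def update(self, node: str, **attrs: object) -> None:
        self.nodes.setdefault(node, {}).update(attrs)

    def get(self, node: str, attr: str) -> object:
        return self.nodes[node][attr]


def plan_dummy_targets(start: Vec3, obj: Vec3, approach_height: float = 0.1,
                       steps: int = 4) -> List[Vec3]:
    """Hypothetical progressive plan: approach a point above the object in
    `steps` straight-line increments, then descend onto the object."""
    pre_grasp = (obj[0], obj[1], obj[2] + approach_height)
    targets: List[Vec3] = [
        tuple(s + (p - s) * k / steps for s, p in zip(start, pre_grasp))
        for k in range(1, steps + 1)
    ]
    targets.append(obj)
    return targets


def grasp_dsr_cycle(graph: SharedGraph,
                    estimate: Callable[[object, object], Vec3]) -> None:
    """One cycle of a hypothetical graspDSR: read RGBD, estimate, write targets."""
    rgb = graph.get("camera", "rgb")
    depth = graph.get("camera", "depth")
    obj_pos = estimate(rgb, depth)                   # objectPoseEstimation call
    graph.update("target_object", position=obj_pos)  # inject the estimated pose
    start = graph.get("gripper", "position")
    graph.update("gripper", dummy_targets=plan_dummy_targets(start, obj_pos))


graph = SharedGraph()
# viriatoPyrep -> viriatoDSR: publish the camera frame and gripper state.
graph.update("camera", rgb="rgb-frame", depth="depth-frame")
graph.update("gripper", position=(0.0, 0.0, 0.5))
# graspDSR, with a stubbed estimator standing in for objectPoseEstimation.
grasp_dsr_cycle(graph, lambda rgb, depth: (0.4, 0.0, 0.1))
# viriatoDSR -> viriatoPyrep: read back the targets for the arm to follow.
for target in graph.get("gripper", "dummy_targets"):
    print(target)
```

Because every component only reads and writes graph attributes, none of them needs to call another directly, which is the main appeal of routing the pipeline through the shared graph.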

Important Dates

- August 2, 2020:

Finish the whole grasping pipeline and create a complete demo.

Commit: https://github.com/robocomp/grasping/commit/e503dd4ef2afd8b1b351bdc684df0cda54c73529

- August 3, 2020:

Merge the two pose estimation components into the objectPoseEstimation component for integration with DSR.

Commit: https://github.com/robocomp/grasping/commit/affe68dbe0a0866e25c39608c96e1b02b453e8a0

Upcoming Work

- Start working on the graspDSR component.
- Test the grasping pipeline through the new architecture.
- Write full documentation on the integration with DSR.
- Final evaluation and code submission.

Mohamed Shawky