Final Project Submission

DATE : August 24, 2020

This is the final post of the "DNNs for precise manipulation of household objects" project. Here, I present the work done and the results of the project. I also discuss some future improvements and link to all my contributions during the project.

Brief Description

The ability of a robot to detect, grasp and manipulate objects is one of the key challenges in building an intelligent collaborative humanoid robot. In this project, I, Mohamed Shawky, worked with RoboComp and my mentor, Pablo Bustos, to develop a fast and robust pose estimation and grasping pipeline using the RoboComp framework and recent advances in Deep Learning. I used two recent DNN architectures, Segmentation-driven 6D Object Pose Estimation and PVN3D, to build a pose estimation component that estimates object poses from RGBD images. I also integrated this work with the Kinova Gen3 arm through the PyRep API for a fast and robust path planning and grasping workflow. Finally, I worked on integrating pose estimation and grasping into the CORTEX architecture (based on Deep State Representation (DSR) and implemented using Real-time Pub/Sub (RTPS) and Conflict-Free Replicated Data Type (CRDT)).

Completed Work

Here is a summary of all the work done through each period of the project.

Community Bonding Period

- Communicated with my mentor, Pablo Bustos, to discuss the previous work on the grasping problem and form a detailed project workflow.

- Familiarized myself more with the RoboComp framework and the 6D object pose estimation literature.

- Started working on the Segmentation-driven 6D Object Pose Estimation network implementation, based on open-source inference code.

First Coding Period

-

Completed a full implementation of

Segmentation-driven 6D Object Pose Estimationnetwork. -

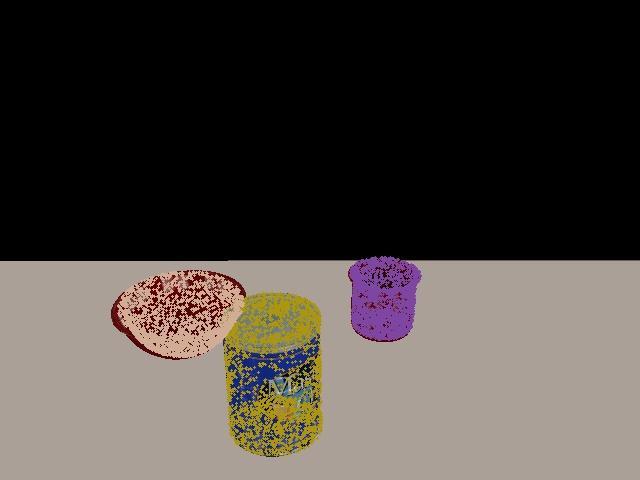

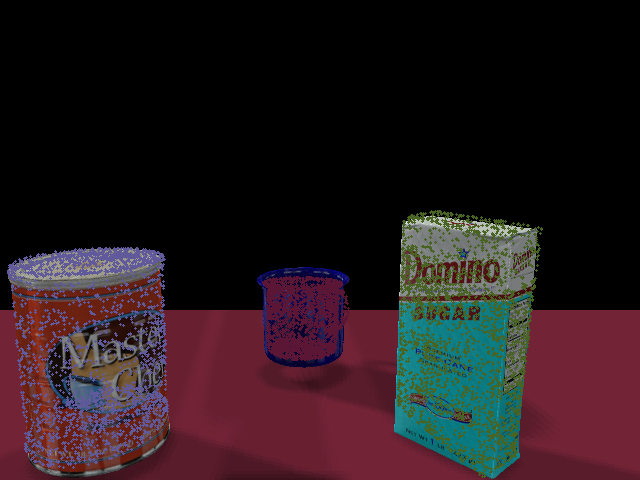

Trained the network on

YCB-Videosdataset, which is one of the most diverse open-source datasets. -

Tested the trained network on

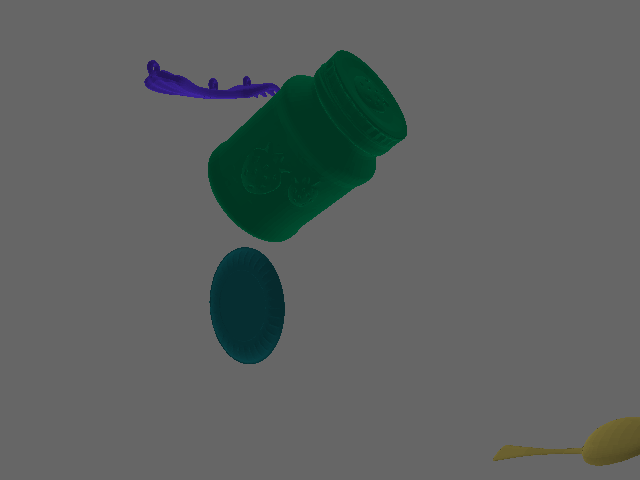

YCB-Videosdataset and data collected fromCoppeliaSimenvironments. -

Implemented a Python data collector using PyRep API, Open3D and CoppeliaSim to collect more data for augmentation.

-

Retrained the network on augmented dataset.

Second Coding Period

-

Improved the implementation of

Segmentation-driven 6D Object Pose Estimationnetwork through using focal loss and fixing some training issues. -

Retrained the network on the final settings.

-

Investigated further improvements of pose estimation pipeline, which led me to

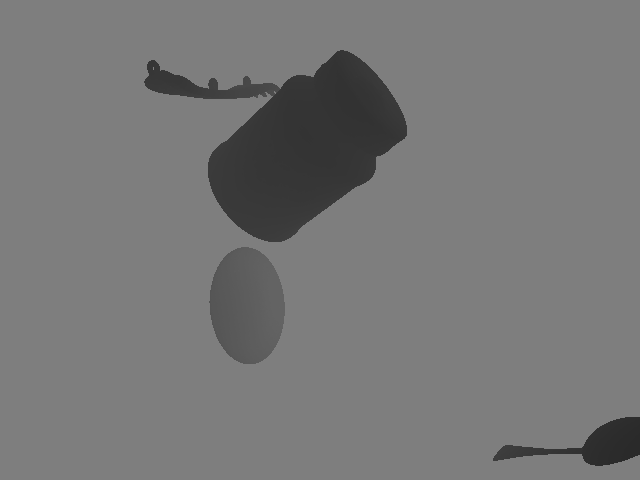

PVN3Dnetwork. -

Studied

PVN3Dnetwork and integrated its open-source implementation to a new RoboComp component. -

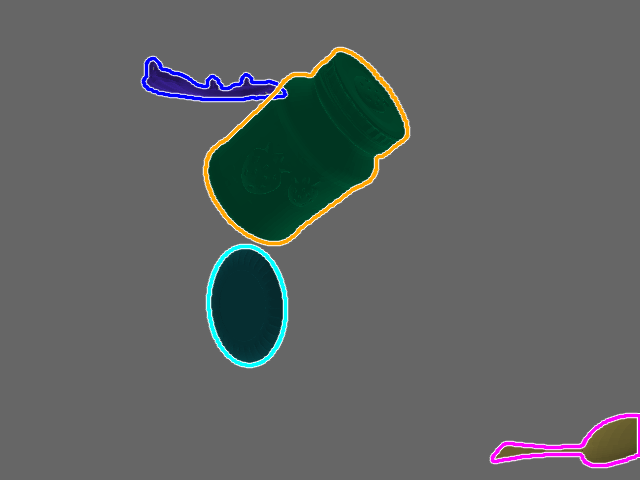

Completed two pose estimation components. One for RGB inference using

Segmentation-driven 6D Object Pose Estimationnetwork and another for RGBD inference usingPVN3Dnetwork. -

Adapted the previous work with Kinova Gen3 arm to PyRep API, where I added embedded Lua scripts to the arm’s model and called them, remotely, through PyRep API. Thus, we have a fast and robust workflow for path planning and grasping.

-

Implemented

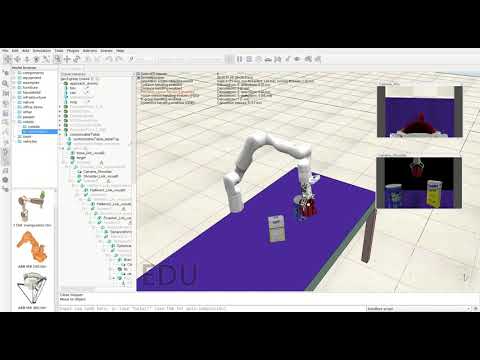

viriatoGraspingPyrepcomponent, which integrates and tests the whole pose estimation and grasping pipeline.
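To illustrate the focal loss mentioned in this period, here is a minimal NumPy sketch of the standard multi-class formulation, which scales the cross-entropy of each sample by (1 - p_t)^gamma so that easy, well-classified samples contribute less. The function name `focal_loss`, the default `gamma=2.0` and the use of NumPy are assumptions made for illustration; the loss settings and framework actually used in the project are not stated in this post.

```python
import numpy as np

def focal_loss(logits, targets, gamma=2.0, eps=1e-12):
    """Mean multi-class focal loss (illustrative sketch, not the project's code).

    logits:  (N, C) array of raw class scores.
    targets: (N,) array of integer class labels.
    gamma:   focusing parameter; gamma = 0 recovers plain cross-entropy.
    """
    logits = np.asarray(logits, dtype=np.float64)
    targets = np.asarray(targets, dtype=np.int64)
    # Numerically stable softmax over the class dimension.
    shifted = logits - logits.max(axis=1, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    # Probability assigned to the true class of each sample.
    p_t = probs[np.arange(len(targets)), targets]
    # (1 - p_t)^gamma shrinks the loss of already well-classified samples.
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t + eps)))
```

With `gamma=0` the function reduces to ordinary cross-entropy, which makes it easy to compare both losses on the same batch.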

Third Coding Period

- Created the first complete grasping demo of the Kinova Gen3 arm.

- Studied the workflow of the new DSR architecture through installation, testing and code review.

- Discussed the process of integrating pose estimation and grasping into DSR with my mentor, Pablo Bustos.

- Merged both RGB and RGBD pose estimation components into one component, named objectPoseEstimation, for DSR integration.

- Implemented an object detection component that uses RetinaNet to improve object tracking in DSR.

- Implemented the graspDSR C++ agent, which is an interface between the objectPoseEstimation component and the shared graph (a.k.a. G). It also plans the dummy targets for the robot arm to reach the required object.

- Tested the validity of the arm’s embedded Lua scripts using DSR.

- Helped debug and improve some features of the DSR Core API.

- Wrote detailed documentation about the DSR integration and its problems.

- Improved visualization and memory allocation in the pose estimation components.

- Revisited all the previous work and improved some documentation.
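As a rough illustration of how an estimated object pose can be turned into a target for the arm, the sketch below composes a camera-frame object pose with the camera pose in the world and places a target slightly above the object. This is not the graspDSR implementation (which is a C++ agent); the function names, the 4x4 homogeneous-matrix representation, the frame names and the 10 cm offset along the world z-axis are all assumptions made for this example.

```python
import numpy as np

def pose_to_matrix(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(rotation, dtype=float)
    T[:3, 3] = np.asarray(translation, dtype=float)
    return T

def pregrasp_target(world_T_camera, camera_T_object, approach_offset=0.10):
    """Return a hypothetical arm target above the object, in the world frame.

    world_T_camera:  4x4 pose of the camera in the world frame.
    camera_T_object: 4x4 object pose estimated in the camera frame.
    approach_offset: height in metres added along the world z-axis (assumed).
    """
    # Chain the transforms to express the object pose in the world frame.
    world_T_object = world_T_camera @ camera_T_object
    target = world_T_object.copy()
    # Keep the object's orientation and lift the target above it.
    target[2, 3] += approach_offset
    return target
```

For example, with a camera at (1.0, 0.0, 0.5) and an object estimated at (0.0, 0.2, 0.3) in the camera frame, both with identity rotation, the object sits at (1.0, 0.2, 0.8) in the world frame and the target is placed `approach_offset` above it.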

Results and Demonstrations

Contributions Summary

| Repo | Commits | PRs | Issues |
|---|---|---|---|
| robocomp/grasping | link | - | - |
| robocomp/dsr-graph | link | link | link |
| robocomp/robocomp | link | link | link |
| robocomp/DNN-Services | link | - | - |
| robocomp/web | link | link | - |

Future Work and Improvements

The work on this project has been completed successfully, with full documentation and a decent set of demos. However, some work remains on the DSR framework before the implemented pose estimation and grasping pipeline can be used completely through DSR. This work can be summarized as follows:

- Solve the related open issues on the robocomp/robocomp repo.

- Update viriatoDSR to pass the arm target poses to viriatoPyrep from G.

- Add grasping methods to viriatoPyrep, similar to viriatoGraspingPyrep.

- Test the pose estimation and grasping pipeline with tracking and social navigation, once done.

Mohamed Shawky